Since I tinker with Linux on mobile devices quite a bit, the growing pile of Linux tablets and phones on my desk often looks underutilized. I wish I could wire some of those lonely black slabs together and extend my monitor or, why not, use them as controllers for my desktop windows in a half PARC Tab, half Stream Deck fashion [0].

Any reasonable adult knows that USB-C looking symmetric does not mean it is any good at transferring things between peer devices. Still, I think that using my tablet as a secondary screen over Wi-Fi would be a lifesaver, e.g. while travelling, sparing me the dead weight of a portable monitor in my backpack.

A first prototype

Some time ago, I hacked together a rudimentary Python script to make this possible. This script creates a virtual monitor in GNOME via D-Bus, records it [1], and pipes it into a network stream.

TuxPhones / side-displays - GitLab

After a bit of tinkering with video encoders, this tool achieved fairly low latency (~500ms-ish) by e.g. switching from standard TCP streaming to raw H264 packets over UDP. So I posted my experiment on Twitter [2], receiving quite a bit of positive feedback, which motivated me to write this tutorial:

Using a Linux phone as a secondary monitor - TuxPhones.com

And in turn, after this article was read ~40k times in the week I published it, I deemed it worthy of being rewritten from an ugly hack into a more meaningful project. Which, to no one’s surprise, turned out to be a much deeper rabbit hole than I had anticipated.

The Mirror Hall app

Let’s spoil the ending already: after a lot of experiments, now we can say there’s a GNOME app for that! Well, sort of. Please keep reading.

Mirror Hall [beta]


Download Flatpak bundle (with libav/x264)

Install via Flathub (no libav/x264, slower)

Browse sources and report issues on GitLab

Mirror Hall 0.1 is a big experiment, so please don’t expect it to be anywhere close to production-ready for now. Given the tight schedule I have on weekends for my side projects, expect its future development to be pretty slow.

Unfortunately, US laws do not allow us to serve x264 on Flathub due to patent issues. Although hardware-based H.264 is available in the Flathub package via VAAPI, this turned out to be slower and slightly less reliable than the x264/libav combo this app was conceived with, which you can still find by installing the bundle in the first link.

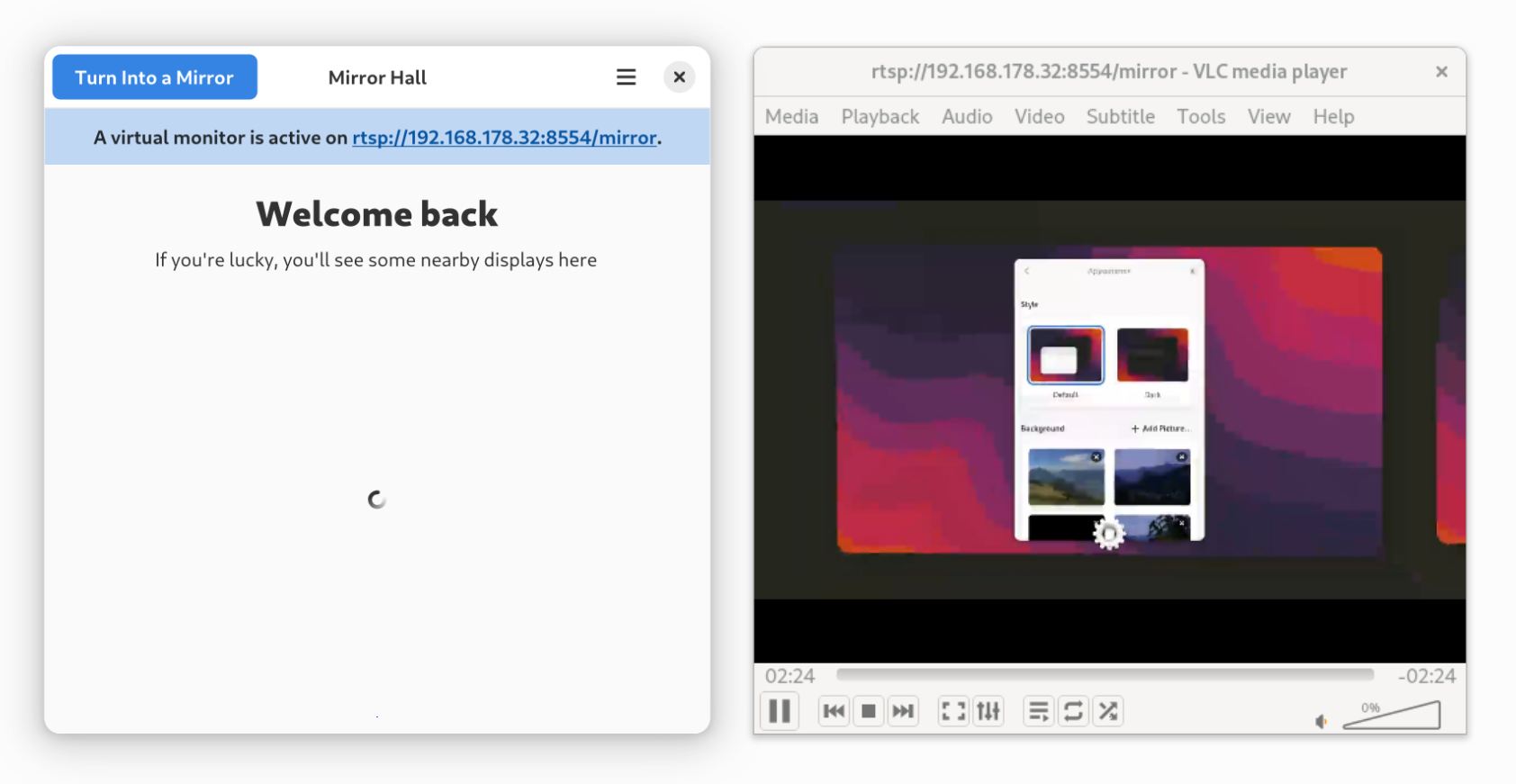

What it looks like

At a high level, Mirror Hall is a blend of a wireless video streamer (think of Chromecast or GNOME Network Displays), a network video player (sort of a modified Miracast sink), and a virtual display maker for GNOME desktops. Here’s what it looks like:

The app has two symmetric modes you can flip between. At launch, Mirror Hall opens in Stream Mode and detects peers in your network. If you switch roles, Mirror Mode will start accepting video streams from your friendly neighbours.

If you’re wondering what it actually feels like to use, this early demo from 2023 was run from a Dell XPS 9370 (2018) and streamed to my Purism Librem 5 over Wi-Fi, achieving very low latency in spite of the relatively slow CPU and networking capabilities of this phone.

In case you were wondering, the other way around works as well! As eccentric as it sounds, you can use a Linux desktop as a giant second screen for your Linux phone, as long as you run GNOME Mobile on it.

How It’s Made

Since we already gave the Flatpak as a quick snack for impatient readers, we can now delve into the interesting bits.

Under the hood, Mirror Hall implements a fully open-source stack:

- GStreamer as the underlying video infrastructure, doing all the heavy lifting for encoding, decoding, packing, and streaming;

- mDNS (zeroconf) to advertise the Mirror Hall devices on the network;

- H.264 video over raw UDP packets as the video standard, to minimize latency and adopt a commonly hardware-assisted codec.

On the front-end, things are fairly standard. We have GLib as the means to interact with Mutter’s D-Bus APIs, and the GTK4 / libadwaita combo as our loyal UI framework.

libmirrormaker - creates virtual extended monitors

In the times when Xorg still reigned supreme, agnostic virtual video sinks (monitors) were pretty easy to create. Tools like xrandr and Xvfb made this task a breeze on e.g. Intel GPUs, yet with Wayland, this has become far from straightforward.

At its core, libmirrormaker is a tiny library that tries to create a virtual monitor on the DE you are using. Currently, we only support GNOME and its Mutter window manager, since it is, to the best of my knowledge, the only major WM to expose an API to create virtual monitors. This means that our app will only work on GNOME and other Mutter-based environments such as Budgie until other DEs expose similar APIs.

Luckily, a few Linux desktops are starting to expose their own D-Bus APIs to create virtual second displays. But there is no standard API for that, and only Mutter and Sway support it at the time of writing.

- GNOME and Mutter have added support for headless native backend and virtual monitors since version 40

- On KDE/KWin Wayland, we have kwin_wayland --virtual, but it looks like it is not possible to use it for this use case, as it is solely a debugging endpoint.

- On Sway, there is a headless backend API

- On Xorg, something similar could be technically achieved via xrandr/Xvfb depending on the GPU

Having said that, the trick for creating a Mutter virtual screen is fairly simple [3]:

- Call the CreateSession D-Bus endpoint on org.gnome.Mutter.ScreenCast to create a virtual headless sink,

- Call the RecordVirtual endpoint on org.gnome.Mutter.ScreenCast.Session to record the newly created session

- Wait for a PipeWire stream to be created by listening to the PipeWireStreamAdded signal on org.gnome.Mutter.ScreenCast.Stream

- Capture the signal and feed the new PipeWire object to GStreamer’s pipewiresrc

After this, you will be able to do any fun manipulations inside GStreamer.
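As a rough illustration, the four steps above could look like this in Python with PyGObject. This is a minimal sketch and not Mirror Hall's actual code: the function names are made up, the cursor-mode option and the final Start call on the session are assumptions that the list above does not mention, and it only does something useful inside a running GNOME session.

```python
BUS_NAME = "org.gnome.Mutter.ScreenCast"
OBJECT_PATH = "/org/gnome/Mutter/ScreenCast"
SESSION_IFACE = "org.gnome.Mutter.ScreenCast.Session"
STREAM_IFACE = "org.gnome.Mutter.ScreenCast.Stream"


def pipewire_source(node_id):
    """Return the GStreamer source description for a PipeWire node id."""
    return f"pipewiresrc path={node_id}"


def create_virtual_monitor(on_node):
    """Create a virtual monitor and call on_node(node_id) once its stream exists.

    Blocks in a GLib main loop; the monitor lives as long as this process does.
    PyGObject is imported here so the helper above stays usable without it.
    """
    from gi.repository import Gio, GLib

    def proxy(path, iface):
        return Gio.DBusProxy.new_for_bus_sync(
            Gio.BusType.SESSION, Gio.DBusProxyFlags.NONE, None,
            BUS_NAME, path, iface, None)

    def call(target, method, args=None):
        return target.call_sync(method, args, Gio.DBusCallFlags.NONE, -1, None)

    # Step 1: CreateSession on org.gnome.Mutter.ScreenCast
    screencast = proxy(OBJECT_PATH, BUS_NAME)
    no_options = GLib.Variant("(a{sv})", ({},))
    session_path = call(screencast, "CreateSession", no_options).unpack()[0]

    # Step 2: RecordVirtual on the new session
    # (assumption: cursor-mode 1 asks Mutter to draw the cursor into the stream)
    session = proxy(session_path, SESSION_IFACE)
    options = GLib.Variant("(a{sv})", ({"cursor-mode": GLib.Variant("u", 1)},))
    stream_path = call(session, "RecordVirtual", options).unpack()[0]

    # Steps 3 and 4: wait for PipeWireStreamAdded and hand over the node id
    stream = proxy(stream_path, STREAM_IFACE)

    def on_signal(_proxy, _sender, signal_name, parameters):
        if signal_name == "PipeWireStreamAdded":
            on_node(parameters.unpack()[0])

    stream.connect("g-signal", on_signal)
    call(session, "Start")  # assumption: the session has to be started explicitly
    GLib.MainLoop().run()
```

Calling create_virtual_monitor(lambda node: print(pipewire_source(node))) would then print something like "pipewiresrc path=42", ready to be used as the head of a GStreamer pipeline.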

libpipeline - compresses, packs, and sends stuff around

Once libmirrormaker creates a virtual device, we need to grab the PipeWire stream, do a bunch of encoding shenanigans, and broadcast it over the network. Luckily, GStreamer will do all the heavy matrixy math for us, as long as we meticulously specify how our pipeline is to be wired up.

MirrorHall’s sender pipeline roughly works as follows:

- pipewiresrc grabs the stream,

- An encoder such as vaapih264enc or x264 turns it into H.264 [4],

- rtph264pay encodes the raw H.264 stream into a sequence of RTP packets

- udpsink streams the raw UDP H.264 packets to the network

Moreover, we use a bunch of queues in the pipelines to do video buffering. We chose to use rtph264pay and raw UDP as in our tests it performed considerably faster than the pre-cooked rtpsink (measured ~60-120ms vs. ~1s latency), although migrating to it in the future might be a wise choice and enable support for third-party clients.
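To make the wiring more concrete, here is a small sketch that assembles a sender description in gst-launch-1.0 syntax. The element names are real GStreamer elements, but the function, the position of the queues, and the property values (zerolatency tuning, CBR rate control, sync=false) are assumptions for illustration and not the exact pipeline Mirror Hall ships.

```python
def sender_pipeline(node_id, host, port, encoder="x264enc", bitrate_kbps=6500):
    """Build a gst-launch-1.0 style description of the sender chain."""
    if encoder == "x264enc":
        # x264enc takes its bitrate in kbit/s; zerolatency avoids frame lookahead
        encode = f"x264enc tune=zerolatency speed-preset=fast bitrate={bitrate_kbps}"
    elif encoder == "vaapih264enc":
        encode = f"vaapih264enc rate-control=cbr bitrate={bitrate_kbps}"
    else:
        raise ValueError(f"unsupported encoder: {encoder}")

    stages = [
        f"pipewiresrc path={node_id}",         # grabs the virtual monitor
        "videoconvert",                        # pixel format the encoder accepts
        "queue",
        encode,                                # raw video -> H.264
        "queue",
        "rtph264pay config-interval=1 pt=96",  # H.264 -> RTP packets
        f"udpsink host={host} port={port} sync=false",
    ]
    return " ! ".join(stages)
```

For example, sender_pipeline(42, "192.168.1.20", 1234) returns a string that can be pasted after gst-launch-1.0 on the streaming machine, where 42 stands for whatever PipeWire node id Mutter handed out.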

On the receiving end, we basically have the same pipeline in reverse: we take an incoming stream from udpsrc, unwrap and decode it, and feed it to a video player widget based on the new Rust-based gtk4paintablesink. We also support clappersink as an alternative player backend.
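The receiving side can be sketched in the same way. The snippet below picks the first available decoder from a preference list and builds the reverse chain; the preference order and the helper names are hypothetical, and autovideosink stands in for the in-app player widget.

```python
# Hypothetical preference order: hardware decoders first, software fallbacks last
DECODER_PREFERENCE = ["vaapih264dec", "v4l2h264dec", "avdec_h264", "openh264dec"]

RTP_CAPS = ("application/x-rtp, media=(string)video, clock-rate=(int)90000, "
            "encoding-name=(string)H264, payload=(int)96")


def pick_decoder(available):
    """Return the most preferred H.264 decoder among the available element names."""
    for name in DECODER_PREFERENCE:
        if name in available:
            return name
    raise LookupError("no supported H.264 decoder found")


def receiver_pipeline(port, available, sink="autovideosink"):
    """Build a gst-launch-1.0 style description of the receiver chain."""
    stages = [
        f'udpsrc port={port} caps="{RTP_CAPS}"',  # incoming RTP over UDP
        "queue",
        "rtph264depay",                           # RTP packets -> H.264 stream
        "queue",
        pick_decoder(available),                  # H.264 -> raw video
        "queue",
        "videoconvert",
        sink,
    ]
    return " ! ".join(stages)
```

On a machine without VAAPI, receiver_pipeline(1234, {"avdec_h264"}) would fall back to the libav software decoder.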

libcast - negotiates the connection and advertises devices

Finally, we have a wrapper around the mDNS protocol to provide network scan and advertising for a custom MirrorHall device type over the network in a Chromecast-like fashion. libcast handles basic features like advertising and implicit negotiation of resolution and codecs supported by the receiver.

However, this mDNS implementation is still a bit quirky, and e.g. cannot always advertise the right route when you are connected - as is very common - to multiple network interfaces at the same time. Mirror Hall tries to advertise multiple network addresses to work around this issue, but it is far from foolproof and maybe not ideal on a privacy level.
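For readers unfamiliar with mDNS advertising, here is a minimal sketch using the third-party python-zeroconf package, which is not what Mirror Hall itself uses. The service type "_mirrorhall._udp.local." and the TXT keys are invented for the example; the point is only to show one service being registered under several addresses, with the receiver's resolution and codecs attached as metadata.

```python
import socket

SERVICE_TYPE = "_mirrorhall._udp.local."  # hypothetical service type


def txt_record(width, height, codecs=("h264",)):
    """Describe what the receiver can display, as mDNS TXT key/value pairs."""
    return {
        "width": str(width),
        "height": str(height),
        "codecs": ",".join(codecs),
    }


def advertise(name, addresses, port, width, height):
    """Register the sink under every given IPv4 address (one per interface).

    Requires python-zeroconf, imported here so txt_record() works without it.
    The caller should later run zc.unregister_service(info) and zc.close().
    """
    from zeroconf import ServiceInfo, Zeroconf

    info = ServiceInfo(
        SERVICE_TYPE,
        f"{name}.{SERVICE_TYPE}",
        addresses=[socket.inet_aton(address) for address in addresses],
        port=port,
        properties=txt_record(width, height),
    )
    zc = Zeroconf()
    zc.register_service(info)
    return zc, info
```

Advertising several addresses at once, e.g. advertise("librem5", ["192.168.1.20", "10.0.0.5"], 1234, 720, 1440), mirrors the workaround described above, including its privacy drawback: every address becomes visible to every network the device is on.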

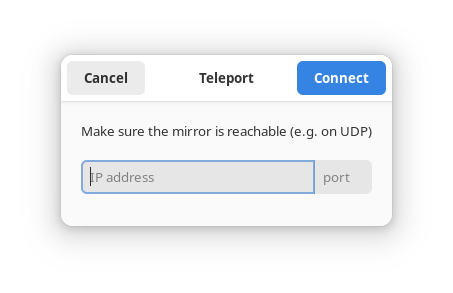

Finally, if a grumpy firewall blocks mDNS on your network, you can still knock at your neighbour’s door directly.

Some tricks for better performance

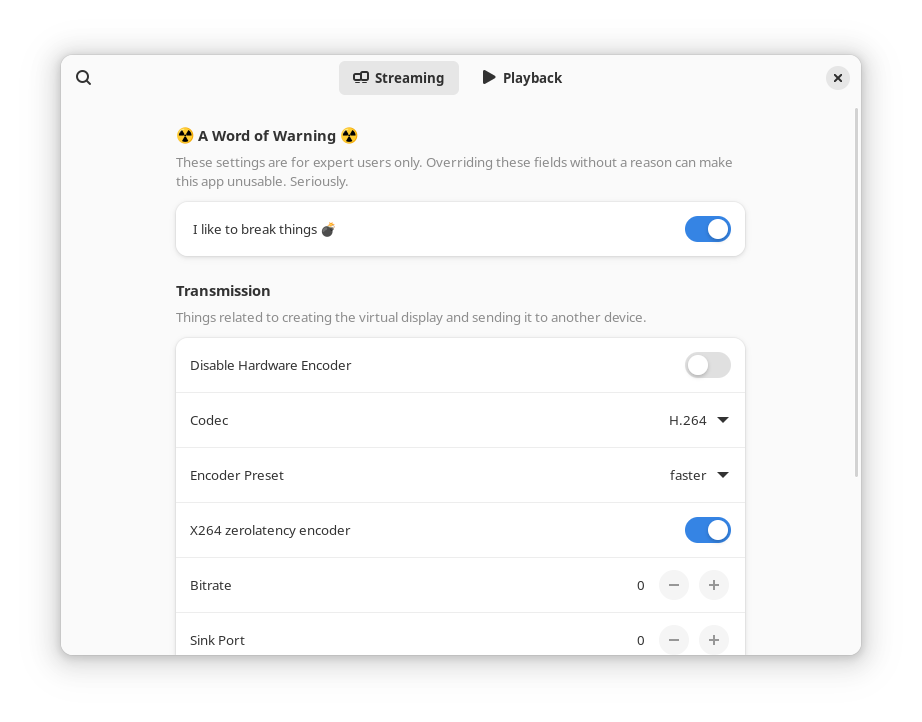

Contrary to most tools, Mirror Hall sends H.264 video over a raw UDP stream. This allows for much better performance at the expense of video artifacts on unstable connections. This is a compromise to make sure you can actually use your mouse and keyboard with very reasonable lag.

On the encoding side, we make some use of x264’s settings, which we brewed according to mere trial and error scientifically(TM). For instance, we set a maximum bandwidth of 6.5 Mbit per second for 720p/30fps streams, low enough for most networks to handle but high enough to stream H.264, and use tricks like x264’s fast encoding preset (less glitchy than ultrafast at the expense of some milliseconds of lag), or its equivalent, on a stream capped at 30 FPS to avoid timing headaches.
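To get a feeling for what the 6.5 Mbit/s cap means, a bit of back-of-the-envelope arithmetic helps. The sketch below assumes a 1280x720 stream in I420 (12 bits per pixel) and about 1400 bytes of payload per UDP packet; both are assumptions, and real frames vary a lot in size (keyframes are much larger than the average).

```python
import math


def stream_budget(width=1280, height=720, fps=30,
                  bitrate_bps=6_500_000, payload_bytes=1400):
    """Compare the raw video rate with the encoder's bitrate cap."""
    raw_frame_bytes = width * height * 3 // 2      # I420: 1.5 bytes per pixel
    raw_bps = raw_frame_bytes * 8 * fps            # uncompressed bit rate
    avg_frame_bytes = bitrate_bps / fps / 8        # budget per encoded frame
    return {
        "raw_mbit_s": raw_bps / 1e6,
        "compression_ratio": raw_bps / bitrate_bps,
        "avg_frame_bytes": avg_frame_bytes,
        "packets_per_frame": math.ceil(avg_frame_bytes / payload_bytes),
    }
```

With the defaults, the raw stream would be roughly 332 Mbit/s, so the encoder has to squeeze it by a factor of about 51, leaving around 27 kB (some 20 packets) per frame on average. Losing any one of those packets is what shows up as a glitch on an unstable connection.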

And if you’re on the risky quest for better performance, you can re-configure quite a few parameters of the video pipeline manually:

Bonus: RTSP fallback server

In the past weeks, I also added an RTSP interface on the streaming device’s side, since this protocol is slightly more standard than our pipeline format, and I thought someone would anyway ask for it at some point.

In theory, this makes it possible to use e.g. Android or Windows/Apple devices as MirrorHall clients, at the expense of considerably worse performance. This is because RTSP is not particularly meant for real-time streaming, it uses TCP, and players like VLC add considerable buffering to ensure smooth (but late) streaming.

So your favourite cone-shaped player can also act as a MirrorHall client… provided you don’t mind a much, much higher lag.
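If the client is a Linux machine with GStreamer, part of that buffering can be traded away by hand. The sketch below builds a receiver around rtspsrc with its jitter buffer (the latency property, in milliseconds) turned down; the URL in the usage note is a placeholder, since the actual port and path of the RTSP endpoint are not specified here, and the software decoder is an arbitrary choice.

```python
def rtsp_client_pipeline(url, latency_ms=0):
    """Build a gst-launch-1.0 style RTSP receiver with a small jitter buffer."""
    stages = [
        f"rtspsrc location={url} latency={latency_ms}",
        "rtph264depay",
        "avdec_h264",                 # software decoder, chosen for portability
        "videoconvert",
        "autovideosink sync=false",   # show frames as soon as they are decoded
    ]
    return " ! ".join(stages)
```

For instance, rtsp_client_pipeline("rtsp://HOST:PORT/PATH") yields a description that can be run with gst-launch-1.0 once HOST, PORT and PATH are replaced with the real endpoint. A lower latency value means less lag but more stutter on a flaky network.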

Bonus: (Yes, There Is Also A) CLI

If you’d like to somehow debug or integrate MirrorHall externally, or just don’t like any computer paradigm born after the 80s, you can use a tiny CLI that exposes some of Mirror Hall’s core functionality. This is faster as well as a bit more stable in some edge cases, and its usage is as simple as it gets.

mirrorhall cast [ip] [port] [width?] [height?]

-> creates virtual screen and streams it to ip:port

mirrorhall make-mirror [width] [height]

-> creates virtual screen and outputs PipeWire handle

mirrorhall sink [port] [print?]

-> starts accepting virtual streams on [port]

For example, running sink mode yields:

aragorn $ mirrorhall sink 1234

Mirrorhall CLI - version 0.1.0

Tip: run with GST_DEBUG=3 for debugging output

Sink started on port 1234 - press Ctrl+C to stop

Using source: udpsrc port=1234 with caps application/x-rtp, clock-rate=(int)90000, media=(string)video, format=I420, encoding-name=(string)H264, payload=(int)96, stream-format=(string)byte-stream ! queue ! rtph264depay

Best decoder: vaapih264dec

Tip: You can use this command to replicate the pipeline outside of Mirror Hall.

$ gst-launch-1.0 udpsrc port=1234 ! application/x-rtp, clock-rate=(int)90000, media=(string)video, format=I420, encoding-name=(string)H264, payload=(int)96, stream-format=(string)byte-stream ! queue ! rtph264depay ! queue ! vaapih264dec ! queue ! videoconvert ! autovideosink

As a fun fact, you can copy the generated pipeline that you see in the app logs (see above) to any terminal to spawn a GStreamer receiver for Mirror Hall on e.g. an SSH-connected client, and won’t need to install anything aside from GStreamer on the peer machine.
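If you want to drive the CLI from a script, a thin wrapper is enough. The sketch below only relies on the subcommands and the GST_DEBUG tip shown above; the helper names are made up, and it assumes the mirrorhall binary is on your PATH.

```python
import os
import subprocess


def cast_argv(ip, port, width=None, height=None):
    """Arguments for: mirrorhall cast [ip] [port] [width?] [height?]"""
    argv = ["mirrorhall", "cast", str(ip), str(port)]
    if width is not None and height is not None:
        argv += [str(width), str(height)]
    return argv


def sink_argv(port):
    """Arguments for: mirrorhall sink [port]"""
    return ["mirrorhall", "sink", str(port)]


def launch(argv, debug=False):
    """Start the CLI in the background, optionally with GStreamer debug output."""
    env = dict(os.environ)
    if debug:
        env["GST_DEBUG"] = "3"
    return subprocess.Popen(argv, env=env)
```

A receiver could then be started with launch(sink_argv(1234), debug=True) and stopped again by calling terminate() on the returned process.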

Supported devices

After a bit of work, we have a bunch of stable pipelines for most x86 and ARM devices, often also with hardware-based codecs, plus a stable fallback software codec for more exotic configurations.

For instance, Mirror Hall is tested to work in both directions on the following devices:

| Source Hardware | Target Hardware | Selected Pipeline | Result |
|---|---|---|---|
| Intel Arc | Intel HD 620 | VAAPI | 🟢️ Stable |
| Intel HD 620 | Vivante GC7000Lite (Purism Librem 5) | x264, libav | 🟢️ Stable |
| Intel HD 620 | Qualcomm Adreno (Poco F3) | VAAPI, libav | 🟢️ Stable |
| Intel HD 620 | VideoCore VI (Raspberry Pi 4) | VAAPI + libav (software) | 🟡️ Fairly stable |
| Intel Arc | Intel HD 620 | openh264 (Flathub) | 🟡️ Stable but slow |
| Intel HD 620 | Qualcomm Adreno (Poco F3) | VAAPI, V4L2 (Flathub) | 🔴️ Frequent glitches |
| Intel HD 620 | AMD Radeon | VAAPI (Flathub) | 🔴️ Occasional glitches |

So although your device is not guaranteed to be supported by the set of pipelines we baked, Mirror Hall should work fine on most devices with hardware acceleration, and still support some degree of efficient software encoding when all else fails.

Limitations and future plans

Needless to say, plenty of things need to be ironed out to make this usable.

In order of priority, these are its main shortcomings:

- Encryption. The lack thereof right now is pretty ugly. We are streaming raw UDP video like it’s 2004, which can be intercepted by virtually anyone snooping the network. This might be solved by implementing something like srtpenc in the pipeline.

- Stability. The video transmission is fast but sensitive to unstable networks and, while being faster, does not really get close to most protocols in terms of reliability for now. Furthermore, it takes a while to resume after a network issue, and does not detect conflicting streams, so clock issues can arise on busy networks.

- Support for other desktop environments, once appropriate APIs exist. Similarly, support for automatic DE screen scaling on the virtual sink and for actual mirroring (i.e., not extending the screen) would expand the use cases of this app.

- More standard protocol integrations. We do not expose a clean API for our internal protocol (which, by the way, was formerly called brokecast), and slower-but-ubiquitous protocols like Miracast or Chromecast could also be useful sinks for this app. If you are in a hotel, that wireless-enabled TV on the wall could be a nice place to stash your secondary windows while you work.

- Thunderbolt or Wi-Fi Direct support for painless casting without depending on external routers. At the moment, only postmarketOS devices support USB plug-and-play casting, as they normally expose a USB network interface by default.

- Zero-copy and even faster pipeline performance. We could integrate more tightly with other hardware configurations to achieve faster performance, and e.g. use stateless encoding and zero-copy strategies whenever they are supported.

Having said that, the above is more of a loose future wishlist than any tangible goal at the time of writing.
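To give an idea of what the encryption item could involve, here is a purely speculative sketch of the tail of a sender pipeline with GStreamer's srtpenc slotted in between the payloader and the UDP sink. Nothing like this exists in Mirror Hall today; the helper names are invented, and the hard part (getting the key to the receiver safely, and configuring srtpdec on the other end) is left out entirely.

```python
import secrets


def new_srtp_key():
    """Return 30 random bytes as 60 hex characters.

    30 bytes is the master key plus salt size for AES-128 counter mode,
    which is assumed here to be the cipher in use.
    """
    return secrets.token_hex(30)


def encrypted_tail(host, port, key_hex):
    """Payload, encrypt and send: the last three stages of a sender pipeline."""
    stages = [
        "rtph264pay config-interval=1 pt=96",
        f"srtpenc key={key_hex}",   # RTP -> SRTP
        f"udpsink host={host} port={port} sync=false",
    ]
    return " ! ".join(stages)
```

The key would be generated once per session, e.g. key = new_srtp_key(), and the same value would have to reach the receiver over some trusted channel before streaming starts.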

Upstreaming plans

Mirror Hall comes to life in an ecosystem where we don’t lack alternatives for wireless streaming, so in an increasingly fragmented Linux app ecosystem, why not extend an existing project?

The issue is, all those I found were too specific to cover this “secondary wireless desktop” use case, and none of them would have accepted support for an oddly specific GNOME / Mutter API integration as a standard feature. Our pipeline is deliberately non-standard in that it tries to squeeze out as much performance as possible, whereas e.g. Miracast and Chromecast feel very laggy for this use case but are fairly reliable for others.

Moreover, it seems like there is still more interest in “boring” wireless mirroring of existing displays than in virtual wireless displays, which is why Mirror Hall is probably the first project to do this on Linux.

Having said that, I am happy to think of Mirror Hall as a big demo for the potential of Linux to stream video efficiently. I would be happy to cast Mirror Hall into the fire, as I did with Gestures last year, if upstream work in GNOME Shell or another larger video streaming project made MirrorHall’s use case wholly possible.

In fact, I miss “traditional” wireless streaming as a feature in GNOME Shell, and adding some sort of “Extend with Virtual Screen” switch would at least make Mirror Hall’s server side a breeze. This could work similarly to Apple devices:

But Shell is a notoriously hard project to get new features into, so for now keeping this functionality in a separate app is not only more maintainable, but will hopefully also allow users of other desktops to benefit from it soon.

Related work

Finally, here are some vaguely related projects for Linux network video streaming that I found while writing this:

- GNOME Network Displays is a simple Miracast streamer for GNOME, which lets you cast any of your integrated displays to nearby TVs.

- DesktopCast is a GStreamer-based Chromecast-like tool for Linux

- This script uses GUD compression over USB to use postmarketOS devices as second screens

- Playercast is a daemon to cast media files between Linux devices using GStreamer pipelines and mDNS.

But with most existing video tools being strictly asymmetrical, as in either players or streaming tools, it is good for this app to swing both ways.

Epilogue

This was one of my longest-running projects to get to a usable first release, and I learned a lot about networks, video streaming, and AV performance by working on it. Although the result is still far from perfect, I am really happy that it is finally out there.

As usual, full-time work eats much of my time, and this post got delayed by months past when it was supposed to be released. That is, until one night I was stuck in an airport, surrounded by flooded roads and a huge thunderstorm, and my flight being cancelled until the morning after motivated me to finally get down to finishing this post. Well, almost. That was three months ago already.

I cannot thank Sonny Piers enough for his encouragement on this project, as well as Caleb Connolly for helping me debug ARM video performance, and Rafostar and Robert Mader for providing me some guidance on tuning my pipelines for performance.

So if this post feels a bit more like a “post mortem” now that Mirror Hall 0.1 is out, I would love to not only make this more usable, but also explore wireless Linux desktop sharing further in a performance-conscious and more upstream way.

[0] Only Thunderbolt allows some degree of networking, but it does so in a complicated way and requires bourgeois cables. Also, the PARC Tab was an early mobile computing concept designed for constant synchronization with the nearby environment, which looked like this:

[1] To be entirely honest, the very first set-up I made for this required a physical $1 “dummy HDMI plug” to be plugged into my laptop to spawn a second screen.

[2] Historian’s note: back in 2021, Twitter was a reasonably nice microblogging platform with a beautiful blue bird logo. Anyway, here’s the link you’re after.

[3] This procedure was first documented (quite obscurely) in this GitLab issue.

[4] Currently, only H.264 is supported due to its wide availability. As efficient H.264 decoders seem to require additional licensing, and cannot be easily used e.g. in Flathub apps, we should probably add support for a less disputed format.